Our methodology

Research.com adheres to high standards and transparent procedures based on well-established metrics in order to produce a wide range of rankings for the research community in a variety of disciplines.


At Research.com, we wanted our rankings to suit their audience from the very beginning. To this end, we designed our methodology to answer the most common needs of students. In particular, we asked ourselves:

In the Best Colleges ranking, we evaluate institutional performance across several key dimensions that together describe the overall quality of the college. These dimensions capture research strength, academic environment, student experience, post-graduation outcomes, and affordability.

The scored values are grouped into thematic areas and normalized to ensure comparability across institutions of different sizes and profiles.

The research area describes the research activity and scholarly impact of the institution. We take into account four primary indicators: governmental grants per faculty member, private grants per faculty member, the Hirsch index (H-index), and the citedness score.

Grant amounts are normalized by the number of faculty members to allow fair comparison between institutions of different sizes. Higher values indicate stronger research funding and greater scholarly influence. All research-related data are sourced from the OpenAlex database.

The academic quality area reflects both student preparedness and institutional teaching capacity. It includes the faculty-to-student ratio, which measures the level of individual academic attention students may receive, and the student retention rate, which reflects satisfaction and continuity of education.

While entry exam scores and faculty compensation are evaluated as part of the dataset, their influence on the final ranking depends on their statistical variability in a given ranking cycle.

The campus life area describes factors that contribute to student well-being and quality of life outside the classroom. These include the availability of entertainment and extracurricular activities, as well as the presence of student support services such as health clinics, counseling centers, and legal or academic assistance.

Student outcomes measure institutional success after graduation. This area includes alumni salaries, the working (employment) ratio, and the acceptance rate, which serves as an indicator of institutional selectivity and competitiveness.

The total cost of attendance is included as an inverted metric. Institutions with lower costs receive higher scores, reflecting the importance of affordability in higher education decision-making.

All numerical values are scaled linearly to a common range prior to aggregation. For indicators where lower raw values indicate better performance, such as total cost, the scaling is inverted accordingly.
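The scaling step can be illustrated with a minimal sketch. The text does not state the common range, so this assumes min-max scaling to [0, 1]; the function name `scale_linear` and the choice to return zeros when an indicator has no variation are assumptions of the sketch, not Research.com's code.

```python
def scale_linear(values, invert=False):
    """Min-max scale raw indicator values to the range [0, 1].

    With invert=True (for indicators such as total cost, where lower is
    better), the scale is flipped so the best raw value maps to 1.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # No variation: this sketch gives every institution the same score.
        return [0.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) for v in values]
    return [1.0 - s for s in scaled] if invert else scaled


# Hypothetical total costs of attendance for three colleges.
costs = [20000, 35000, 50000]
print(scale_linear(costs, invert=True))  # [1.0, 0.5, 0.0]
```

The cheapest college receives the top score of 1.0, which is the inversion described for total cost.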

The scaled indicators are then combined using the Entropy weighting method¹. This approach assigns higher weights to indicators that exhibit greater variation across institutions, as such indicators provide stronger differentiation between colleges. As a result, the assigned weights are data-dependent and may vary slightly between ranking editions.
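A minimal sketch of the standard entropy weighting formulation (as in Zou et al., 2006): each indicator's values are turned into shares, the Shannon entropy of those shares is normalized by ln m (m institutions), and each weight is proportional to 1 minus that entropy. The function name and the equal-weight fallback when nothing varies are assumptions of this sketch, not Research.com's implementation. Note how an indicator with identical values for every institution ends up with a weight of 0.

```python
import math


def entropy_weights(matrix):
    """Entropy weights for indicators.

    matrix: rows are institutions, columns are indicators; values must be
    non-negative (for example, linearly scaled scores).
    """
    m = len(matrix)
    if m < 2:
        raise ValueError("need at least two institutions")
    n = len(matrix[0])
    k = 1.0 / math.log(m)  # normalizes entropy to the range [0, 1]
    divergences = []
    for j in range(n):
        column = [row[j] for row in matrix]
        total = sum(column)
        if total == 0:
            divergences.append(0.0)  # an all-zero column carries no information
            continue
        shares = [x / total for x in column]
        # Shannon entropy of the shares; terms with share 0 contribute 0.
        e = -k * sum(p * math.log(p) for p in shares if p > 0)
        divergences.append(max(0.0, 1.0 - e))  # clamp tiny negative rounding errors
    d_sum = sum(divergences)
    if d_sum == 0:
        return [1.0 / n] * n  # assumption: equal weights if no indicator varies
    return [d / d_sum for d in divergences]


# Column 2 is identical for all institutions, so it cannot differentiate them.
scores = [[1.0, 0.5], [0.0, 0.5], [0.5, 0.5]]
print([round(w, 3) for w in entropy_weights(scores)])  # [1.0, 0.0]
```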

1 Zou, Z. H., Yi, Y., & Sun, J. N. (2006). Entropy method for determination of weight of evaluating indicators in fuzzy synthetic evaluation for water quality assessment. Journal of Environmental Sciences, 18(5), 1020–1023.

Based on the current dataset, the entropy-derived weights for the Best Colleges ranking are as follows:

Indicators such as entry exam scores, faculty compensation, internationality, and campus safety received a weight of 0% in the current ranking cycle due to insufficient variation across institutions. This does not imply that these factors are unimportant, but rather that they did not statistically differentiate institutions in the analyzed dataset.

Apart from the Best Colleges ranking, we also publish several complementary rankings derived using consistent principles:

The Best Public Colleges and Best Private Colleges rankings are subsets of the main Best Colleges ranking, filtered by institution type.

The Most Popular Colleges ranking is based on applicant volume, reflecting institutional demand and competitiveness.

The Most Affordable Colleges ranking is designed for students seeking high-quality education at a reasonable price. This ranking combines strong overall institutional performance with lower total cost.

The Best Value Colleges ranking is based on return on investment, defined as alumni earnings divided by total cost of attendance.
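The Best Value ratio is simple arithmetic. The sketch below ranks two hypothetical colleges by it; the names and figures are invented, and which earnings measure is used (for example, median earnings at a given point after graduation) is not specified in the text, so the inputs are placeholders.

```python
def return_on_investment(alumni_earnings, total_cost):
    """Best Value ratio: alumni earnings divided by total cost of attendance."""
    if total_cost <= 0:
        raise ValueError("total cost of attendance must be positive")
    return alumni_earnings / total_cost


# Hypothetical (earnings, total cost) pairs.
colleges = {"College A": (60000, 120000), "College B": (55000, 80000)}
ranked = sorted(colleges, key=lambda c: return_on_investment(*colleges[c]), reverse=True)
print(ranked)  # ['College B', 'College A']
```

College B ranks first despite lower earnings, because its ratio (0.6875) beats College A's (0.5).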

When evaluating stationary (on-campus) programs, we focus on program-specific characteristics. Campus amenities and safety indicators are excluded, as they do not depend on the program itself. However, broader institutional quality remains relevant and is therefore included through the overall institution score.

Each program is evaluated across four areas: students, costs, research, and institution.

The students area includes entry exam scores, program enrollment size, student retention, and applicant trends over the past five years. These indicators reflect program demand, continuity, and academic preparedness.

The costs area includes median earnings after completion, total program cost, and cost trends over time. Lower costs and higher earnings result in higher scores. Financial aid is excluded, as it is institution-specific and already reflected in the institution score.

Research performance is evaluated at the discipline level. We consider the total number of publications in the discipline over the last five years, the total number of citations received, and the mean citations per paper.
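The three discipline-level research figures can be sketched as follows. The `Paper` record and the function name are hypothetical, and "the last five years" is interpreted here as the current year plus the four preceding years, which is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    year: int
    discipline: str
    citations: int


def discipline_research_metrics(papers, discipline, current_year, window=5):
    """Return (publication count, total citations, mean citations per paper)
    for one discipline over the last `window` years, inclusive of current_year."""
    recent = [p for p in papers
              if p.discipline == discipline
              and current_year - window < p.year <= current_year]
    total_citations = sum(p.citations for p in recent)
    mean = total_citations / len(recent) if recent else 0.0
    return len(recent), total_citations, mean


papers = [
    Paper(2020, "Computer Science", 10),
    Paper(2023, "Computer Science", 4),
    Paper(2018, "Computer Science", 50),  # outside the five-year window
    Paper(2022, "Biology", 7),            # different discipline
]
print(discipline_research_metrics(papers, "Computer Science", 2024))  # (2, 14, 7.0)
```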

The institution score represents the overall institutional quality derived from the Best Colleges ranking and captures factors that benefit students regardless of their chosen program.

The current entropy-derived weights for stationary programs are:

Online programs are evaluated using a methodology adapted to distance learning. Campus-related indicators are excluded, while factors related to program structure and student support are emphasized.

Programs are evaluated across five areas: students, costs, research, course, and institution.

The course score is specific to online programs and includes program length, availability of learning resources, and student support services. Shorter programs score higher, reflecting efficiency and time-to-completion.

The current entropy-derived weights for online programs are:

In addition to the Best Online Programs ranking, we publish:

For the ranking of top scientists (first launched in 2014), the criteria for including scholars are based on their Discipline H-index (D-index), the proportion of their contributions made within a given discipline, and the awards and achievements of the scientist in specific areas. Scholars are ranked in descending order of D-index, in combination with their total number of citations.

What is the D-index?

The H-index is an indicative measure that reflects the number of influential documents authored by a scientist. It is defined as the largest number h such that h of the scientist's papers have each received at least h citations [3]. The H-index and citation data we use are obtained from various bibliometric data sources. The Discipline H-index (D-index) is calculated in the same way, but considers only the publications, and their citations, deemed to belong to the examined discipline.
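Both definitions can be sketched in a few lines. This assumes each paper already carries a single discipline label, which is a simplification: how Research.com assigns publications to disciplines is not described here, and the `(discipline, citations)` pair format is hypothetical.

```python
def h_index(citation_counts):
    """Largest h such that h papers have at least h citations each."""
    counts = sorted(citation_counts, reverse=True)
    h = 0
    for rank, c in enumerate(counts, start=1):
        if c >= rank:
            h = rank
        else:
            break
    return h


def d_index(papers, discipline):
    """H-index restricted to papers labelled with the given discipline.

    papers: list of (discipline, citations) pairs.
    """
    return h_index([c for d, c in papers if d == discipline])


papers = [("CS", 25), ("CS", 8), ("CS", 5), ("CS", 3), ("Math", 40), ("Math", 30)]
print(h_index([c for _, c in papers]))  # 5
print(d_index(papers, "CS"))            # 3
```

The overall H-index counts all six papers, while the D-index for CS keeps only the four CS papers, which is why it is lower.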

To ensure a fair ranking across all disciplines, in addition to the D-index we also consider the number of publications in journals and conferences ranked and classified in the examined discipline. Scientists should have a consistent ratio of publications in discipline-ranked venues relative to their D-index. This bibliometric ratio indicates how much of a scientist's work is pertinent to the given discipline.

Besides the use of citation-based metrics, we also conduct rigorous searches for each scientist to inspect and include the awards, fellowships and academic recognitions they have received from leading research institutions and government agencies.

The D-index threshold for listing a scientist is set in increments of 10, depending on the total number of scientists estimated for each discipline. The threshold ensures that the top 1% of leading scientists among all scholars and researchers in the discipline are considered for the ranking. In addition, the gap between a scientist's global H-index and their D-index should be 30% or less.
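The two numeric rules above can be sketched as follows, under loudly stated assumptions: the threshold is taken as the D-index at the top-1% cut-off rounded down to a multiple of 10, and the 30% gap is measured relative to the global H-index. Both function names are hypothetical, and the exact rounding and gap definitions used by Research.com are not given in the text.

```python
import math


def d_index_threshold(d_indices, top_share=0.01, step=10):
    """Hypothetical threshold: the D-index at the top-1% cut-off,
    rounded down to a multiple of `step` (rounding direction assumed)."""
    ranked = sorted(d_indices, reverse=True)
    cutoff_count = max(1, math.ceil(len(ranked) * top_share))
    cutoff_value = ranked[cutoff_count - 1]
    return (cutoff_value // step) * step


def is_eligible(h, d, threshold, max_gap=0.30):
    """D-index must reach the threshold, and the gap between H-index and
    D-index (relative to the H-index, an assumption) must be at most 30%."""
    if h <= 0:
        return False
    return d >= threshold and (h - d) / h <= max_gap


# Hypothetical discipline with 200 scholars whose D-indices are 1..200.
threshold = d_index_threshold(list(range(1, 201)))
print(threshold)                          # 190
print(is_eligible(250, 195, threshold))   # True  (gap 22%)
print(is_eligible(300, 195, threshold))   # False (gap 35%)
```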

Numbers are never meant to be an absolute measure of the precious contributions of scientists. Still, a threshold value of 40 follows a recommendation by J. E. Hirsch, who suggests in his h-index paper that an h-index of 40 characterizes outstanding scientists [1].

Motivated by the need for a transparent framework for ranking universities based on objective, well-established metrics across disciplines, Research.com is the only ranking platform that treats people as a valuable asset of research and educational institutions while making its data and procedures publicly available in a completely transparent way.

Research.com's university ranking is based purely on the reputation of each institution's scholars. We firmly believe that organizations should be valued based on the talent and reputation of their staff.

Because the ranking problem is compounded by subjectivity, as different experts define and perceive the quality and impact of educational institutions differently, major companies providing mainstream rankings neither fully explain their ranking procedures nor publish their raw data. Moreover, existing university rankings rely mostly on declarative and subjective analysis of data.

The first edition of the Research.com university ranking was released in 2020, covering over 591 research institutions, and was limited to computer science. In 2022, university rankings for all major scientific disciplines were released. The ranking of universities across research disciplines is based on simple metrics closely related to the reputation of academic staff, in addition to research outputs, as described above.

Based on cross-matching analysis, for top universities the Research.com ranking correlates consistently with mainstream rankings maintained by leading organizations with decades of experience in the field, including QS, U.S. News and Times Higher Education (THE).

Research.com offers lists of the best journals in various disciplines, selectively reviewed every year against a number of indicators related to the quality of accepted papers and the reputation of each journal.

As there is no panacea, no magic metric or analytical tool that produces a consensus score satisfying experts from all disciplines, Research.com adopted a strict policy for indexing journals based on the following metrics.

Impact Score is a novel bibliometric indicator that quantifies the level of endorsement a given journal receives from the best and most well-respected scientists. The score is estimated from two factors, computed on data published during the last four years using Microsoft Academic data:

H-IndexValue: Estimated h-index from publications made solely by the best scientists.

NumberTopScientists: Number of scientists who have published in the journal and have contributed to the H-indexValue.
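The two inputs can be sketched as below. The formula that combines them into the final Impact Score is not reproduced in this text, so the sketch stops at the inputs. Assumptions: a paper counts as "made by the best scientists" if at least one author is in the top-scientist set, a scientist "contributed to the H-indexValue" if they co-authored a paper in the h-core, and the four-year window includes the current year. The function name and tuple format are hypothetical.

```python
def impact_score_inputs(papers, top_scientists, current_year, window=4):
    """Return (H-IndexValue, NumberTopScientists) for one venue.

    papers: list of (year, authors, citations) tuples for the venue.
    top_scientists: set of author IDs regarded as the best scientists.
    """
    recent = [(authors, c) for year, authors, c in papers
              if current_year - window < year <= current_year
              and top_scientists.intersection(authors)]
    recent.sort(key=lambda item: item[1], reverse=True)
    h = 0
    for rank, (_, c) in enumerate(recent, start=1):
        if c < rank:
            break
        h = rank
    h_core = recent[:h]  # the h most-cited qualifying papers
    contributors = {a for authors, _ in h_core for a in authors if a in top_scientists}
    return h, len(contributors)


top = {"alice", "bob"}
papers = [
    (2023, ["alice", "x"], 12),
    (2022, ["bob"], 5),
    (2021, ["y"], 100),   # no top scientist among the authors
    (2022, ["alice"], 2),
    (2015, ["bob"], 80),  # outside the four-year window
]
print(impact_score_inputs(papers, top, 2024))  # (2, 2)
```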

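Impact Factor is a measure that reflects the average number of citations an article in a particular journal receives per year. For a given year y, it is computed as the number of citations received in y by all documents the journal published during the two preceding years, divided by the total number of articles it published during the same period. This is elaborated in the following equation:

$$\mathrm{IF}_y = \frac{C_y(y-1) + C_y(y-2)}{N_{y-1} + N_{y-2}}$$

where $C_y(t)$ is the number of citations received in year $y$ by documents published in year $t$, and $N_t$ is the number of articles published in year $t$.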

The Impact Factor is computed by Clarivate from Web of Science data and updated annually [5].
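As a worked example of the two-year calculation, here is a minimal sketch with hypothetical journal figures; the function name is illustrative only and is not part of any Clarivate tool.

```python
def impact_factor(citations_in_year, articles_prev_year, articles_two_years_ago):
    """Two-year Impact Factor for year y.

    citations_in_year: citations received in year y by items the journal
        published in years y-1 and y-2.
    articles_prev_year, articles_two_years_ago: articles published in y-1 and y-2.
    """
    articles = articles_prev_year + articles_two_years_ago
    if articles == 0:
        raise ValueError("no articles published in the two-year window")
    return citations_in_year / articles


# Hypothetical journal: 170 articles in 2023, 150 in 2022, and 960 citations
# received in 2024 by those articles.
print(impact_factor(960, 170, 150))  # 3.0
```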

SCImago Journal Rank (SJR) is a metric developed by the SCImago research laboratory using Scopus data [6]. It indicates the scientific influence or prestige of an academic journal based on two factors: the number of citations the journal receives and the sources those citations come from.

Journals from publishers that require authors to pay an article processing charge (APC) to publish their research are reviewed on a case-by-case basis to ensure that they are not predatory publishers or vanity presses whose main goal is to profit at the expense of quality and research contributions [7].

The editor-in-chief and members of the editorial board for the journal are also inspected against different bibliometric sources to ensure that the journal is led by experts in the area of research related to the journal.

Research.com indexes major conferences in various research disciplines. The ranking of the best conferences is based primarily on the Impact Score indicator, computed using the number of endorsements by leading scientists. Other indicators are also considered when assessing conferences, including indexing, sponsoring bodies, the number of editions and the profiles of their steering committees.

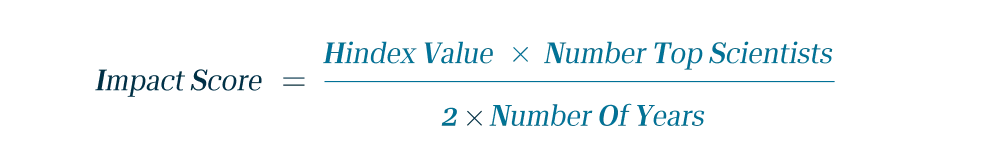

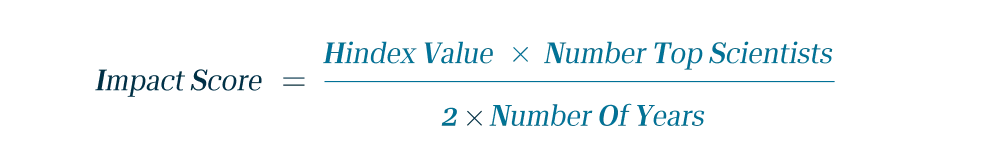

A novel metric called Impact Score is devised to rank conferences based on the number of top scientists contributing to them, in addition to the h-index estimated from the papers published there by those scientists. The score is estimated from two factors, computed on data published during the last four years using Microsoft Academic data:

H-IndexValue: Estimated h-index from publications made solely by the best scientists.

NumberTopScientists: Number of scientists who have published in the conference and have contributed to the H-indexValue.

Regarding technical sponsorship and the indexing of conference proceedings, most of the best conferences indexed by Research.com are sponsored or indexed by leading, well-respected publishers and academic organizations, including IEEE, ACL, Springer, AAAI, USENIX, Elsevier, ACM, OSA and LIPIcs.

References